How to scrape a website with Python & BeautifulSoup

There's a lot of data on websites in the Internet. Some data is provided via APIs (and I'm currently writing a Humane Guide for people who want to learn the fundamentals of calling APIs, more of that later) but quite a lot of data is just static and displayed in HTML across websites.

One could manually copy-paste the data into editor, do a lot of clean-up and then reuse it for computation but as developers, we have better tools for automating them. In this post, I'll share how you can scrape data from a website using Python, few libraries to fetch the data and BeautifulSoup library to parse the data.

This blog post is using Python 3.7.2.

Note about permissions

Just because a piece of data is publicly on a website, it does not mean that you can do whatever you want to retrieve and reuse it. I am not a lawyer and thus, I won't provide any advice here on legality of the tasks. Please consult legal experts on these issues before building bots that scrape the Internet.

Primer to BeautifulSoup

BeautifulSoup is a Python library that parses XML and HTML strings and provides you access to the data in an easier format to consume in your applications and scripts. To get started, we need to install BeautifulSoup.

pip install beautifulsoup4

To make sure everything is working correctly, let's try to replicate the example from BeautifulSoup's documentation:

from bs4 import BeautifulSoup

html_doc = """

<html><head><title>The Dormouse's story</title></head>

<body>

<p class="title"><b>The Dormouse's story</b></p>

<p class="story">Once upon a time there were three little sisters; and their names were

<a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>,

<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and

<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;

and they lived at the bottom of a well.</p>

<p class="story">...</p>

"""

soup = BeautifulSoup(html_doc, 'html.parser')

for link in soup.find_all('a'):

print(link.get('href'))By saving the previous example in a Python file and running it, you should see the following output:

http://example.com/elsie

http://example.com/lacie

http://example.com/tillieSo what happened here? First, we need to import BeautifulSoup (line 1). After that, we use some example HTML stored as a string in a variable called html_doc and provide that as a first argument to BeautifulSoup() constructor. The second argument is for defining the parser that you want to use. There are multiple parsers you can use and the documentation has a nice comparison table for them.

After we've created a BeautifulSoup object, we can call methods on it to query different data. In this case, we call soup.find_all which allows you to search the tree for elements based on arguments. Here we pass in the 'a' to signify that we want all the anchor tags. We then loop over and print the href attribute of each anchor tag.

Searching the tree

BeautifulSoup provides us with different methods to search the tree. Let's take a look at two of them: find and find_all.

find always searches and returns the first match. If in the previous example, we would have used find instead of find_all, we would have only gotten the first anchor tag rather than all three. find_all, like the name suggests, returns all matches.

First argument to these methods is called a filter. You can provide a string (like 'p' to match paragraphs), a regular expressions (like re.compile(r'h\d') to match all headings (tags with h and any single digit)), a list of strings or regular expressions (like ['tbody', 'thead']) , a True to match all tags (but not text content) and finally, a custom function which allows you to do more complex predicates.

In addition to searching for tags, you can provide these methods with keyword arguments to search & filter by different HTML attributes.

for sister in soup.find_all('a', class_='sister'):

print(sister)

# Prints

# <a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>

# <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>

# <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>

for link1 in soup.find_all('a', id="link1"):

print(link1)

# Prints

# <a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>

for example in soup.find_all('a', href=re.compile('.*example.com.*')):

print(example)

# Prints

# <a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>

# <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>

# <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>Accessing data and attributes

Now that we know how to search specific elements, let's see how to access data within them.

# Let's take the first anchor tag

first_sister = soup.find('a')

# Access the tag name

print(first_sister.name) # Prints 'a'

# Access the HTML attributes

print(first_sister.attrs) # Prints {'href': 'http://example.com/elsie', 'class': ['sister'], 'id': 'link1'}

# Access the text content

print(first_sister.string) # Prints 'Elsie'

# You can also use (see below paragraph for difference between text and string)

print(first_sister.text) # Prints 'Elsie'On a simple example like this, .text and .string seem to do the same but there are some differences you should be aware of:

.text

.text returns a concatenated string of all the text content from all children nodes.

text_example = '<p>Hello <span>World</span></p>'

soup = BeautifulSoup(text_example, 'html.parser')

print(soup.find('p').text)

# Prints 'Hello World'.string

On the other hand, .string returns a NavigableString object which allows us to search further using methods like find and find_all.

text_example = '<p>Hello <span>World</span></p>'

soup = BeautifulSoup(text_example, 'html.parser')

print(soup.find('p').string) # Prints None

print(soup.find('p').find('span').string) # Prints WorldIt only returns the string content if there is a single or no children.

.attrs

.attrs returns a dictionary with all the HTML attributes present in the element. You can only access individual attributes directly by indexing the tag element like you would a dictionary:

# Let's take the first anchor tag

first_sister = soup.find('a')

# We can access href in three ways:

print(first_sister.attrs['href']) # Prints http://example.com/elsie

print(first_sister['href']) # Prints http://example.com/elsie

print(first_sister.get('href')) # Prints http://example.com/elsieSome HTML attributes allow you to have multiple values, like class. In these cases, accessing the attribute returns a list instead of a string.

html = '<div class="first second third">Hello world!</div>'

soup = BeautifulSoup(html)

print(soup.find('div').attrs['class']) # Prints ['first', 'second', 'third']This happens even if there is only one class:

html = '<div class="first">Hello world!</div>'

soup = BeautifulSoup(html)

print(soup.find('div').attrs['class']) # Prints ['first']Let's parse a real website!

Alright, I know you're probably eagerly waiting to leave these toy examples behind and parse something in the real world. Let's do that! For this example, I'm using my own website and especially its /speaking page.

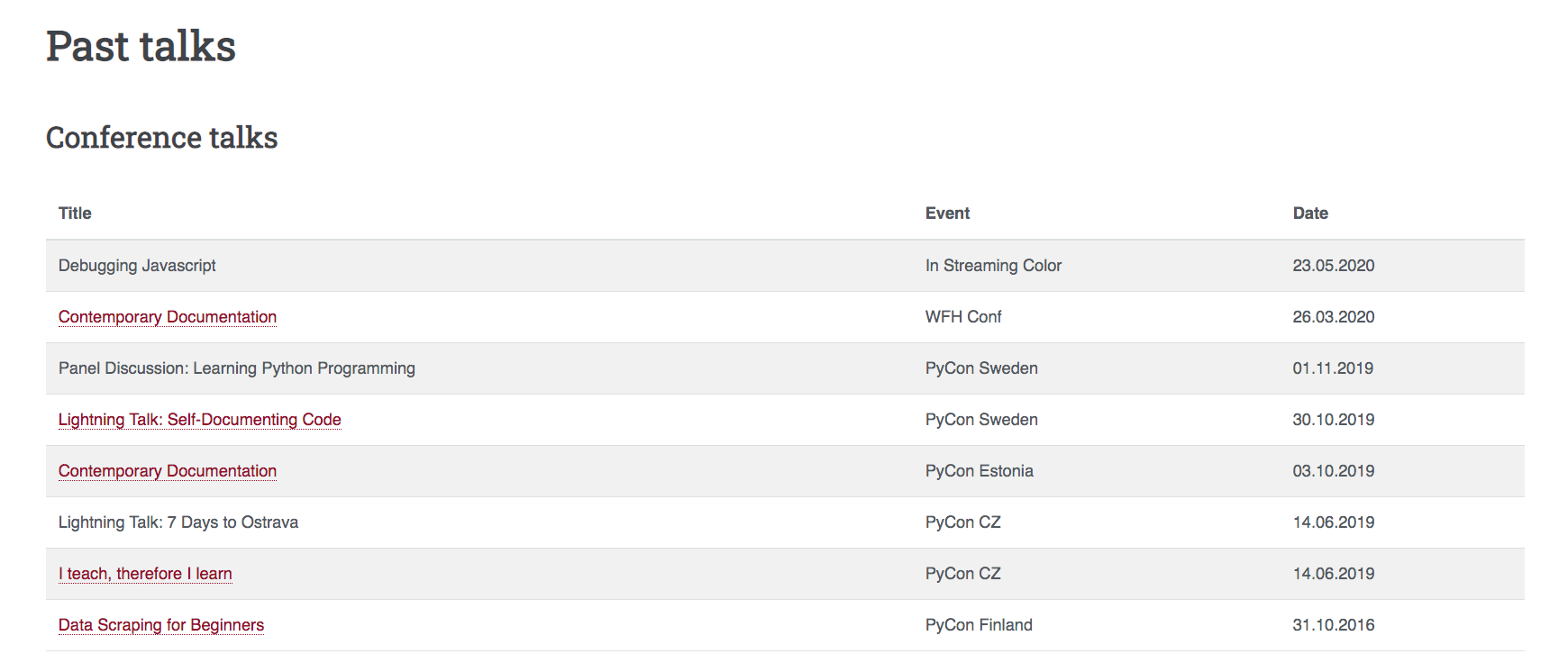

At the time of writing, the first table, conference talks, looked like the above screenshot.

Our goal is to parse all of my talks that have a link to a Youtube video and print them out.

To get access to that HTML, we first need to do a HTTP request to the server to get the data and for this, there are multiple ways to do it in Python. For static sites like this, I usually use requests library.

pip install requestsNow we can use requests to fetch the website and feed that into our BeautifulSoup

import requests

from bs4 import BeautifulSoup

URL = 'https://hamatti.org/speaking/'

page = requests.get(URL)

soup = BeautifulSoup(page.text, 'html.parser')

# If you get 'Speaking : hamatti.org', all is good!

print(soup.find('title').text) At this point, I usually open the DOM Inspector in my Developer Tools (you can also decide to View Source) to see what the structure of the site looks like and try to find items I'm interested in.

In this case, we have four different tables: Conference Talks, Meetup Talks, Podcasts and Startup Accelerators. The links to videos are in first column but not all talks have links.

tables = soup.find_all('table')

tbodies = []

for table in tables:

tbody = table.find('tbody')

tbodies.append(tbody)

first_columns = []

for tbody in tbodies:

trs = tbody.find_all('tr')

for tr in trs:

td = tr.find('td')

first_columns.append(td)

links = [td.a for td in first_columns if td.find('a')]

youtube_links = [link for link in links if 'youtube' in link['href']]

for link in youtube_links:

print(f'{link.text}: {link["href"]}')Parsing websites often end up in quite messy looking scripts. And website structure, classes and ids often change so if you're planning to use this kind of a script in an automated environment, be ready for some failing scripts and maintenance down the line.

My workflow with parsing the web is very iterative. I usually work in Python shell/REPL and work my way through the soup step by step with a lot of trial and error. Once I find something that works, I copy it to a script and at the end, clean it up a bit.

Dynamic websites and working with Javascript

Using requests is good when the website is static, as in the data is in when it gets returned from the server. However, sometimes that's not the case and websites start with empty divs and fill in the data by using API calls and Javascript. In those cases, you will receive the empty div when trying to use requests and BeautifulSoup.

For these cases, we need to use a headless browser that loads the website, renders it, runs Javascript and then gives us the resulting data. There are multiple options for that but one that is very popular is selenium. Selenium allows us to automatically run browser windows, navigate to websites, interact with fields and buttons and it executes the Javascript run on the page.

pip install seleniumIn addition to installing selenium itself, you also need to install drivers for the browsers you want to use. In this example, I'm running Firefox so I'd install geckodriver. Selenium's documentations has information on different drivers and links to their installation pages.

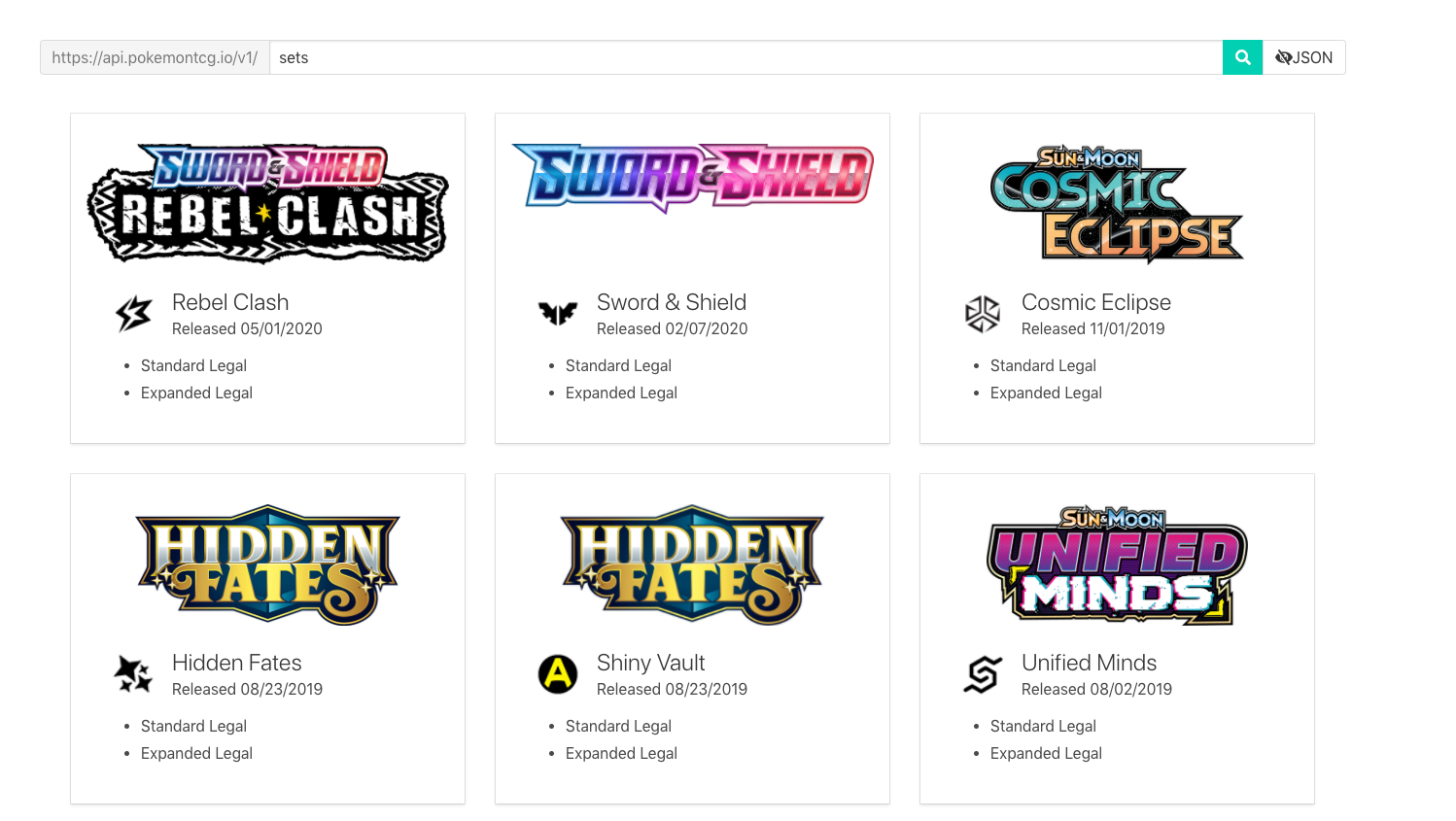

For this example, let's use the website of one of my favorite APIs, PokemonTCG.io. When visited, the /sets subpage gives us a nice visual representation of different sets.

In reality, you would not scrape this particular website as they offer an open API to get all the same data. I'm just using it as an example of a website that injects data into DOM with Javascript.

If we would try to access those by using what we did earlier, we'd end up with empty results:

import requests

from bs4 import BeautifulSoup

URL = 'https://pokemontcg.io/sets'

resp = requests.get(URL)

soup = BeautifulSoup(resp.text, 'html.parser')

print(soup.find('div', class_='set-gallery')) # Prints NoneThis is because after the site has loaded in browser, it calls API to get the sets data and then injects that into a div with class set-gallery.

Using Selenium gives us the power to run the website in a real browser, wait for it to load and then access the populated DOM.

import re

from selenium import webdriver

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.common.by import By

from selenium.webdriver.support import expected_conditions

from bs4 import BeautifulSoup

URL = 'https://pokemontcg.io/sets'

driver = webdriver.Firefox()

driver.get(URL)

elements = WebDriverWait(driver, 10).until(

expected_conditions.presence_of_all_elements_located((By.CSS_SELECTOR, '.card-image')))

html = driver.page_source

driver.quit()

soup = BeautifulSoup(html, 'html.parser')

sets = soup.find_all('a', class_='card', href=re.compile(r'/cards.*'))

for set_ in sets:

set_name = set_.find('p', class_='title').text

set_release_info = set_.find(

'p', class_='subtitle').text.replace('Released ', '')

print(f'{set_name} was released on {set_release_info}')First, we import our libraries. re and BeautifulSoup are already familiar from previous examples so let's take a look at what we import from selenium.

First, we need the webdriver as that will be used to run Selenium. We also import WebDriverWait which we can use to force Selenium to wait for certain actions before closing the browser. By allows us to access different ways of filtering HTML elements (see line 16), and finally expected_conditions is used to tell Selenium what conditions we want to be matched. Depending on what you're doing, you might not need all of these or you might need more. Reading the documentation helps you find out what you need.

On lines 11-12, we open Firefox and navigate to our URL. Then we tell Selenium to wait until elements with class card-image are present before closing the window. It took me a couple of attempts to find good elements to refer to make sure data in this use case was populated correctly.

After that, we get access to the HTML source and we close the browser.

Now, we do what we've done before: finding all sets (in this case they are a tags with certain classes and URLs), scraping the names and release info and doing some data cleanup before printing all of them into the standard output.

Wrap-up

We have now learned how to parse HTML into BeautifulSoup and use it to find the data we want. We also learned how to use requests and selenium to access the data on live websites. What's next? Time for you to experiment, write your own scripts, read more on these libraries' documentation pages and build something nice for yourself!

If something above resonated with you, let's start a discussion about it! Email me at juhamattisantala at gmail dot com and share your thoughts. This year, I want to have more deeper discussions with people from around the world and I'd love if you'd be part of that.